Threat Hunting - Suspicious User Agents | by mthcht | Detect FYI

What is a User-Agent?

A User-Agent string is a line of text that a browser or application sends to a web server to identify itself. It typically includes the name and version of the browser/application, the operating system, and the language. It’s constructed as a list of product tokens (keywords) with optional comments that provide further detail. Tokens are typically separated by spaces, and comments are enclosed in parentheses. Each part of the User-Agent string helps the server determine how to deliver content in a compatible format for the client’s software environment.

Back in the early days of the internet, browsers competed for market share and began mimicking each other’s strings for compatibility. For example, “Mozilla/5.0 (…)” became a common prefix used by many browsers regardless of their relationship to Mozilla. Opera historically included “MSIE” in its User-Agent to bypass sites optimized exclusively for Internet Explorer.

These practices led to the complex and sometimes misleading User-Agent strings we see today.

Example:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36

Mozilla/5.0: legacy compatibility token (no longer meaningful).

(Windows NT 10.0; Win64; x64): operating system (Windows NT 10.0, which both Windows 10 and Windows 11 report; 64-bit).

AppleWebKit/537.36: rendering engine token (frozen for compatibility; Chrome actually uses Blink).

(KHTML, like Gecko): compatibility tokens.

Chrome/120.0.0.0: browser and version.

Safari/537.36: Safari compatibility token.
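To make the token/comment structure concrete, here is a minimal Python sketch (not a full User-Agent parser) that splits a string into product tokens and parenthesized comments. It assumes comments are not nested, which holds for common browser strings but not for every client.

```python
import re
from typing import List, Tuple

# Either a "(...)" comment or a run of non-space, non-parenthesis characters
# (a product token such as "Chrome/120.0.0.0").
_TOKEN_RE = re.compile(r"\(([^)]*)\)|([^\s()]+)")


def parse_user_agent(ua: str) -> List[Tuple[str, str]]:
    """Split a User-Agent into ("product", text) and ("comment", text) parts.

    Simplified: nested parentheses are not handled.
    """
    parts = []
    for m in _TOKEN_RE.finditer(ua):
        if m.group(1) is not None:
            parts.append(("comment", m.group(1)))
        else:
            parts.append(("product", m.group(2)))
    return parts


ua = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
      "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36")
for kind, text in parse_user_agent(ua):
    print(f"{kind:8} {text}")
```

Dedicated parsers (such as the sites listed below) go further and map tokens to browser and OS names; this sketch only recovers the raw structure.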

More info: https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/User-Agent

Sites to help parse/identify user-agents:

https://explore.whatismybrowser.com/useragents/parse/

https://useragentstring.com/

https://www.whatsmyua.info/

Why Detect User-Agent Strings?

Threat actors frequently alter or fabricate User-Agent strings to camouflage traffic within legitimate web requests. Some malware families use unique User-Agent strings when communicating with C2 servers (low false-positive signals), so monitoring unusual or known-malicious User-Agents can help with detection and hunting.

Malware examples

Raccoon Stealer:

Uses distinct HTTP User-Agent strings for C2 communications. Examples (from analyses and sample repositories) include strings such as iMightJustPayMySelfForAFeature, SouthSide, and others.

Sample analyses: https://tria.ge/230404-kmka5adg89/behavioral2 and https://www.joesandbox.com/analysis/1342102/0/html

Bunny Loader:

A malware-as-a-service (MaaS) loader discussed on underground forums that also uses unique User-Agent strings.


I maintain a list of suspicious User-Agents on GitHub: https://github.com/mthcht/awesome-lists/blob/main/Lists/suspicious_http_user_agents_list.csv

For visibility, it’s recommended to route workstation traffic through a company proxy so web requests can be monitored and controlled.

List of Bad User-Agents

Repository (CSV of suspicious User-Agents): https://github.com/mthcht/awesome-lists/blob/main/Lists/suspicious_http_user_agents_list.csv

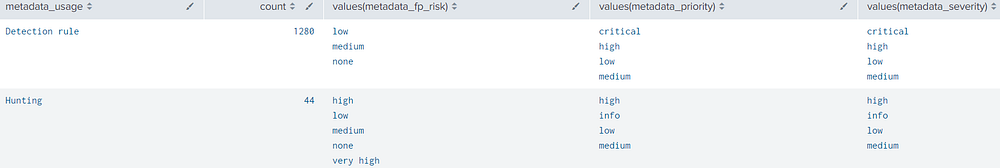

Structure of the CSV file:

http_user_agent: pattern to match (supports wildcards; case-insensitive)

metadata_description: description of the User-Agent

metadata_link: source or reference link

metadata_flow_direction: flow direction (emphasis on internal → external detection)

metadata_category: category (C2, Malware, RMM, Compliance, Phishing, Vulnerability Scanner, Exploitation…)

metadata_priority: priority level (info → low → medium → high → critical)

metadata_fp_risk: false positive risk (none → low → medium → high → very high)

metadata_severity: threat severity (info → low → medium → high → critical)

metadata_usage: recommended usage based on priority/fp/severity — either “Detection rule” (high-confidence rules) or “Hunting” (investigative only)
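A minimal Python sketch of how the CSV could be applied to User-Agent values from proxy logs. It assumes `*` is the only wildcard and that a pattern must match the whole header value (as a wildcard lookup would). The rows in `SAMPLE_CSV` are hypothetical and only illustrate the column layout; load the real file from the repository instead.

```python
import csv
import io
import re
from dataclasses import dataclass
from typing import List


@dataclass
class UaRule:
    pattern: str      # raw http_user_agent value, may contain '*'
    regex: re.Pattern
    category: str
    severity: str
    fp_risk: str
    usage: str


def wildcard_to_regex(pattern: str) -> re.Pattern:
    """Case-insensitive regex where '*' matches any run of characters."""
    # Escape every regex metacharacter, then turn the escaped '*' back into '.*'.
    escaped = re.escape(pattern).replace(r"\*", ".*")
    return re.compile(escaped, re.IGNORECASE | re.DOTALL)


def load_rules(csv_text: str) -> List[UaRule]:
    rules = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        rules.append(UaRule(
            pattern=row["http_user_agent"],
            regex=wildcard_to_regex(row["http_user_agent"]),
            category=row.get("metadata_category", ""),
            severity=row.get("metadata_severity", ""),
            fp_risk=row.get("metadata_fp_risk", ""),
            usage=row.get("metadata_usage", ""),
        ))
    return rules


def match_user_agent(ua: str, rules: List[UaRule]) -> List[UaRule]:
    # Whole-string match against every rule.
    return [r for r in rules if r.regex.fullmatch(ua)]


# Hypothetical rows for illustration only (not copied from the real list).
SAMPLE_CSV = """http_user_agent,metadata_category,metadata_severity,metadata_fp_risk,metadata_usage
SouthSide,Malware,high,low,Detection rule
*curl/*,LOLBIN,low,high,Hunting
"""

rules = load_rules(SAMPLE_CSV)
print([r.category for r in match_user_agent("southside", rules)])
```

Escaping before expanding `*` matters because real User-Agents contain characters such as `(`, `.`, and `+` that would otherwise be read as regex syntax.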

Detecting Threats inside your network

Required: HTTP proxy logs (internal → external flow).

Hunting with a suspicious User-Agent list

Required: Suspicious User-Agent List (CSV) https://github.com/mthcht/awesome-lists/blob/main/Lists/suspicious_http_user_agents_list.csv

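Additional targeted hunts (examples), each restricting the list to one metadata_category:

LOLBIN: User-Agents of built-in system binaries (living-off-the-land binaries) making web requests.

C2: User-Agents associated with command-and-control frameworks and implants.

Malware / RMM / Scanners: follow the same pattern by changing the metadata_category value and the search filters.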

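High severity + low false positives: keep rows whose metadata_severity is high or critical and whose metadata_fp_risk is none or low. These are the best candidates for detection rules rather than hunts.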
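The category and high-confidence filters can be sketched in Python as follows. This is an illustration under assumptions: rows use the column names listed earlier, values are compared case-insensitively, and the sample rows (including `*ExampleC2Agent*`) are hypothetical.

```python
import csv
import io
from typing import Dict, List, Optional

HIGH_SEVERITY = {"high", "critical"}
LOW_FP = {"none", "low"}


def select_rows(csv_text: str, category: Optional[str] = None,
                high_confidence: bool = False) -> List[Dict[str, str]]:
    """Filter list rows by metadata_category and/or severity + false-positive risk."""
    selected = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if category and row["metadata_category"].lower() != category.lower():
            continue
        if high_confidence and not (
            row["metadata_severity"].lower() in HIGH_SEVERITY
            and row["metadata_fp_risk"].lower() in LOW_FP
        ):
            continue
        selected.append(row)
    return selected


# Hypothetical rows for illustration only.
SAMPLE_CSV = """http_user_agent,metadata_category,metadata_severity,metadata_fp_risk
SouthSide,Malware,high,low
*curl/*,LOLBIN,low,high
*ExampleC2Agent*,C2,critical,none
"""

print([r["http_user_agent"] for r in select_rows(SAMPLE_CSV, high_confidence=True)])
```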

Microsoft Sentinel example (KQL): the same GitHub CSV can serve as an external feed, for example loaded with the externaldata operator and matched against proxy logs.

Analyze and investigate any matches found in your proxy logs and the suspicious User-Agent list.

Anomaly Detection in User-Agent Strings

If you prefer not to rely solely on an IOC list, here are hunts to detect anomalies in User-Agent strings.

Unusually long User-Agents

Hunt for User-Agent strings longer than a chosen threshold (example: 250 characters). Expect many false positives — refine with dest/url categorization, HTTP method, and known exclusions.
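A minimal sketch of the length hunt in Python, assuming proxy events are dicts with hypothetical `src_ip`, `dest_host`, and `http_user_agent` keys. The `excluded_hosts` parameter stands in for the known exclusions mentioned above.

```python
from typing import Iterable, Iterator, Tuple

LENGTH_THRESHOLD = 250  # example value from the text; tune per environment


def long_user_agents(
    events: Iterable[dict],
    threshold: int = LENGTH_THRESHOLD,
    excluded_hosts: frozenset = frozenset(),
) -> Iterator[Tuple[str, str, int]]:
    """Yield (src_ip, dest_host, length) for User-Agents longer than threshold."""
    for event in events:
        ua = event.get("http_user_agent") or ""
        if len(ua) > threshold and event.get("dest_host") not in excluded_hosts:
            yield event.get("src_ip"), event.get("dest_host"), len(ua)


events = [
    {"src_ip": "10.0.0.5", "dest_host": "example.com", "http_user_agent": "A" * 300},
    {"src_ip": "10.0.0.6", "dest_host": "example.com", "http_user_agent": "curl/8.4.0"},
    {"src_ip": "10.0.0.7", "dest_host": "cdn.example.net", "http_user_agent": "B" * 400},
]
print(list(long_user_agents(events, excluded_hosts=frozenset({"cdn.example.net"}))))
```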

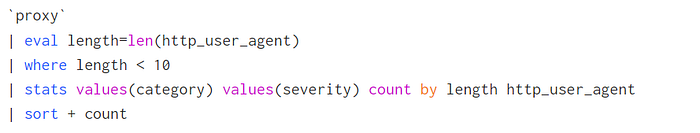

Unusually short User-Agents

Hunt for strings shorter than a threshold (example: fewer than 10 characters). Expect a high false-positive rate. This also catches empty User-Agent values (which Splunk often shows as unknown). Consider focusing on successful cloud sign-ins (e.g., Office 365), where an empty UA is rare.

Note: Empty User-Agent is included in the CSV list.
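A minimal Python sketch that labels a User-Agent value as empty or short. The set of placeholder values treated as empty (`""`, `"-"`, `"unknown"`) is an assumption; adjust it to how your log source records a missing header.

```python
from typing import Optional

# Assumption: placeholder values your log source uses for a missing header.
EMPTY_PLACEHOLDERS = {"", "-", "unknown"}


def classify_short_ua(ua: Optional[str], threshold: int = 10) -> Optional[str]:
    """Return "empty", "short", or None (not suspicious by length)."""
    value = (ua or "").strip()
    if value.lower() in EMPTY_PLACEHOLDERS:
        return "empty"
    if len(value) < threshold:
        return "short"
    return None


for sample in [None, "unknown", "Wget/1.21", "Mozilla/5.0 (X11; Linux x86_64)"]:
    print(repr(sample), classify_short_ua(sample))
```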

Statistical outliers (length-based)

Use average and standard deviation to find UA strings that are statistical outliers:

Compute the length per event: | eval length=len(http_user_agent)

Compute the mean and standard deviation across events: | eventstats avg(length) as avgLength, stdev(length) as stdevLength

Keep the outliers: | where length > avgLength + 3*stdevLength OR length < avgLength - 4*stdevLength

This method is slower but can produce more relevant results.
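For readers outside Splunk, the same test can be sketched in Python on an in-memory list. It mirrors the search above: sample standard deviation (like Splunk's `stdev`, as opposed to `stdevp`), 3 deviations above and 4 below the mean. It is an illustration, not a replacement for running the search over full log volumes.

```python
import statistics
from typing import List, Sequence


def length_outliers(user_agents: Sequence[str], upper_k: float = 3.0,
                    lower_k: float = 4.0) -> List[str]:
    """Return UAs whose length is > mean + upper_k*sd or < mean - lower_k*sd."""
    lengths = [len(ua) for ua in user_agents]
    if len(lengths) < 2:
        return []  # a sample standard deviation needs at least two values
    avg = statistics.mean(lengths)
    sd = statistics.stdev(lengths)  # sample standard deviation
    upper = avg + upper_k * sd
    lower = avg - lower_k * sd
    return [ua for ua, n in zip(user_agents, lengths) if n > upper or n < lower]


sample = ["x" * 100] * 19 + ["x" * 1000]
print([len(ua) for ua in length_outliers(sample)])
```

With small samples a single extreme value inflates the standard deviation, so the test only becomes sensitive once there are enough events per group; on real proxy volumes this is rarely a problem.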

Multiple User-Agents from the same source in a short time

Detect sources presenting many different User-Agents in a short window (possible scanning/enumeration):

Example: more than 4 distinct User-Agents to the same domain (dest_host) in 10 minutes.

Beware of NAT/VIP environments where many users share one IP: there, group by src_user rather than src_ip so that distinct users are not counted as a single source.
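A sliding-window version of this hunt, sketched in Python under assumptions: events are dicts with hypothetical `time` (datetime), `dest_host`, and `http_user_agent` keys, and `key_field` selects `src_ip` or `src_user` as discussed above. The defaults mirror the example: more than 4 distinct User-Agents to the same host within 10 minutes.

```python
from collections import Counter, defaultdict
from datetime import datetime, timedelta
from typing import Iterable, Set, Tuple


def multi_ua_sources(
    events: Iterable[dict],
    window: timedelta = timedelta(minutes=10),
    max_distinct: int = 4,
    key_field: str = "src_ip",  # use "src_user" behind NAT/VIPs
) -> Set[Tuple[str, str]]:
    """Return (source, dest_host) pairs exceeding max_distinct UAs within window."""
    by_pair = defaultdict(list)
    for e in events:
        by_pair[(e[key_field], e["dest_host"])].append((e["time"], e["http_user_agent"]))

    flagged = set()
    for pair, items in by_pair.items():
        items.sort()  # chronological order
        in_window = Counter()  # UA -> occurrences inside the current window
        start = 0
        for t, ua in items:
            in_window[ua] += 1
            # Move the window start forward until it spans at most `window`.
            while t - items[start][0] > window:
                old_ua = items[start][1]
                in_window[old_ua] -= 1
                if in_window[old_ua] == 0:
                    del in_window[old_ua]
                start += 1
            if len(in_window) > max_distinct:
                flagged.add(pair)
                break
    return flagged


t0 = datetime(2024, 1, 1, 12, 0)
events = [  # 5 UAs in 5 minutes from one source, 5 UAs over an hour from another
    {"src_ip": "10.0.0.5", "dest_host": "example.com",
     "time": t0 + timedelta(minutes=i), "http_user_agent": f"UA-{i}"}
    for i in range(5)
] + [
    {"src_ip": "10.0.0.9", "dest_host": "example.com",
     "time": t0 + timedelta(minutes=15 * i), "http_user_agent": f"UA-{i}"}
    for i in range(5)
]
print(multi_ua_sources(events))
```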

Rarest User-Agents

Use Splunk’s rare command to identify the least common User-Agents:

https://docs.splunk.com/Documentation/SplunkCloud/latest/SearchReference/Rare

You can also try anomaly detection commands like anomalydetection on the http_user_agent field.

Additional reference: https://opstune.com/2020/09/16/tracking-rare-http-agent-context-rich-alerts-splunk/
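Outside Splunk, the idea behind `rare` can be approximated by counting values and keeping the least frequent ones. A minimal Python sketch; the `limit` default and the percentage column are modeled loosely on `rare`'s output (check the documentation above for its exact behavior), and the sample values are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def rarest_user_agents(user_agents: Iterable[str],
                       limit: int = 10) -> List[Tuple[str, int, float]]:
    """Return (user_agent, count, percent) for the least frequent values."""
    counts = Counter(user_agents)
    total = sum(counts.values())
    if total == 0:
        return []
    # Ascending by count; ties broken alphabetically so the output is stable.
    ranked = sorted(counts.items(), key=lambda kv: (kv[1], kv[0]))
    return [(ua, n, round(100.0 * n / total, 2)) for ua, n in ranked[:limit]]


proxy_uas = ["Mozilla/5.0 A"] * 50 + ["python-requests/2.31.0"] * 10 + ["SouthSide"]
print(rarest_user_agents(proxy_uas, limit=2))
```

Counting distinct sources per User-Agent instead of raw events is a common refinement, since one noisy host can otherwise hide a value that is genuinely rare across the fleet.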

Suspicious: Logged in with an empty User-Agent

Hunt for successful authentications with empty/unknown User-Agent values in cloud logs (e.g., Office 365). These cases are rare and may indicate anomalous activity.
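A minimal Python sketch of this hunt over already-parsed sign-in events. The field names (`user`, `src_ip`, `status`, `user_agent`), the `"success"` status value, and the placeholders treated as empty are hypothetical; map them to your cloud audit log schema.

```python
from typing import Iterable, List, Optional, Tuple

# Assumption: the values your log source uses when the header is missing.
EMPTY_UA_VALUES = {"", "-", "unknown"}


def empty_ua_signins(auth_events: Iterable[dict]) -> List[Tuple[Optional[str], Optional[str]]]:
    """Return (user, src_ip) for successful sign-ins with a missing User-Agent."""
    hits = []
    for event in auth_events:
        ua = (event.get("user_agent") or "").strip().lower()
        if event.get("status") == "success" and ua in EMPTY_UA_VALUES:
            hits.append((event.get("user"), event.get("src_ip")))
    return hits


signins = [
    {"user": "alice@example.com", "src_ip": "203.0.113.7", "status": "success", "user_agent": ""},
    {"user": "bob@example.com", "src_ip": "198.51.100.9", "status": "failure", "user_agent": None},
    {"user": "carol@example.com", "src_ip": "192.0.2.10", "status": "success",
     "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"},
]
print(empty_ua_signins(signins))
```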

Compliance detections

Mismatch between User-Agent and Host OS

Required:

Proxy logs that include src IP or host OS information

CMDB or a Splunk lookup mapping src_ip to host OS

If a host identified as Windows (from CMDB or host telemetry) makes requests with a Linux User-Agent, investigate for potential virtualization, unmanaged VM, or compromise.
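A rough Python sketch of the comparison, assuming a hypothetical `cmdb` dict that maps `src_ip` to an expected OS family. The OS detection is a coarse keyword match, not a full parser; User-Agents that name no OS (many CLI tools) are skipped rather than flagged.

```python
import re
from typing import Dict, List, Optional, Tuple

# Order matters: Android UAs also contain "Linux", and iOS UAs contain "like Mac OS X".
_OS_PATTERNS = [
    ("android", re.compile(r"android", re.IGNORECASE)),
    ("ios", re.compile(r"iphone|ipad", re.IGNORECASE)),
    ("windows", re.compile(r"windows nt", re.IGNORECASE)),
    ("macos", re.compile(r"macintosh|mac os x", re.IGNORECASE)),
    ("linux", re.compile(r"linux|x11", re.IGNORECASE)),
]


def ua_os_family(ua: Optional[str]) -> Optional[str]:
    """Coarse OS family claimed by a User-Agent, or None if it names no OS."""
    for family, pattern in _OS_PATTERNS:
        if pattern.search(ua or ""):
            return family
    return None


def os_mismatches(events, cmdb: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """Return (src_ip, expected_os, claimed_os) where the UA contradicts the CMDB."""
    results = []
    for event in events:
        expected = cmdb.get(event["src_ip"])
        claimed = ua_os_family(event["http_user_agent"])
        if expected and claimed and expected != claimed:
            results.append((event["src_ip"], expected, claimed))
    return results


cmdb = {"10.0.0.5": "windows", "10.0.0.6": "windows"}  # hypothetical lookup
events = [
    {"src_ip": "10.0.0.5", "http_user_agent": "Mozilla/5.0 (X11; Linux x86_64) Gecko/20100101 Firefox/121.0"},
    {"src_ip": "10.0.0.6", "http_user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0.0.0"},
    {"src_ip": "10.0.0.9", "http_user_agent": "curl/8.4.0"},
]
print(os_mismatches(events, cmdb))
```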

User-Agents indicating outdated or vulnerable browsers

Track known old User-Agent strings (for instance, Internet Explorer 8.0) to detect outdated browsers. This is typically a compliance task best handled by software management tools, but you can use UA parsing to find outdated versions.
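A minimal Python sketch of version-based checks. The `MIN_MAJOR` thresholds are hypothetical and should come from your own patch policy; Internet Explorer (the `MSIE` token, or `Trident/` for IE 11) is treated here as outdated regardless of version.

```python
import re
from typing import Optional

# Hypothetical minimum major versions; derive these from your own patch policy.
MIN_MAJOR = {"Chrome": 120, "Firefox": 115}

_BROWSER_RE = re.compile(r"\b(Chrome|Firefox)/(\d+)")


def outdated_browser(ua: str) -> Optional[str]:
    """Return a short reason if the User-Agent looks outdated, else None."""
    if "MSIE " in ua or "Trident/" in ua:
        return "Internet Explorer"
    for name, major in _BROWSER_RE.findall(ua):
        minimum = MIN_MAJOR.get(name)
        if minimum is not None and int(major) < minimum:
            return f"{name} {major} (minimum {minimum})"
    return None


print(outdated_browser("Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; Trident/4.0)"))
```

User-Agents can be spoofed and Chromium-based browsers now reduce the detail they send, so treat these results as leads for software inventory tools rather than as proof of an outdated install.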

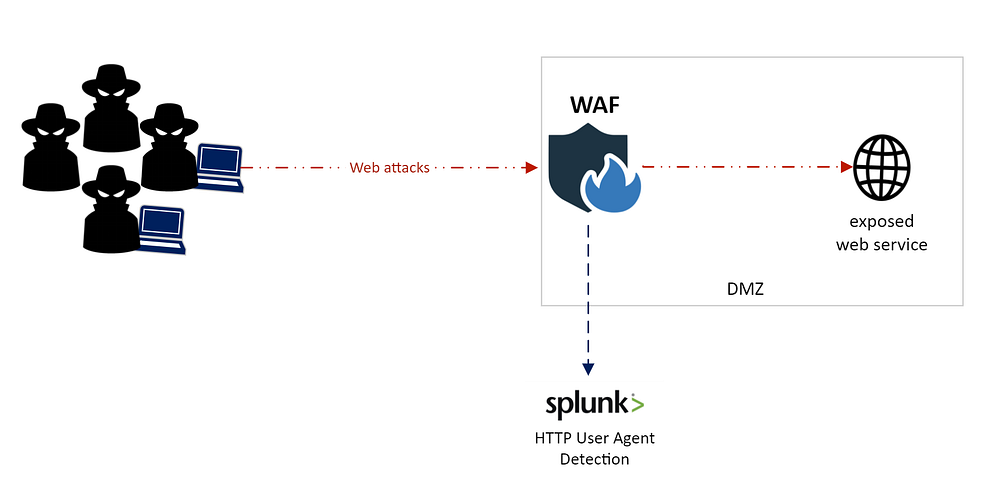

Logging External Threats

Required: WAF logs / Webserver access logs / Reverse proxy logs and Firewall logs

Constantly alerting on external web attacks (including suspicious User-Agents) can create overwhelming noise. A higher-signal approach:

Log external IPs associated with blocked attacks or suspicious User-Agents (from WAF, web server, or reverse proxy logs).

Only alert when:

An internal host connects to one of these flagged IP addresses; or

The attacker’s IP successfully connects to an exposed service; or

The flagged IP is associated with an email sender/recipient seen in your environment.

Illustration: logging external attacks with suspicious User-Agents using a WAF.

Apply this correlated approach across log types and external attack vectors rather than tracking all external UA anomalies.

Alerting criteria (examples)

Only generate a high-confidence alert if the attacker’s IP:

Successfully connected to any exposed service (AD, VPN, MAIL, SSH, cloud auth);

Is contacted by an internal source after the attack;

Is observed in email sender/recipient fields tied to internal events.
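The correlation logic can be sketched in Python as follows. Everything here is an assumption for illustration: `flagged` maps each logged attacker IP to the time it was first logged, `Flow` is a hypothetical normalized connection record whose `established` flag you would derive from firewall actions or TCP flags, and `email_ips` holds IPs seen in email sender/recipient context. A flagged IP on its own never produces an alert.

```python
import ipaddress
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Flow:
    time: datetime
    src_ip: str
    dest_ip: str
    established: bool  # hypothetical: derived from firewall action or TCP flags


def is_internal(ip: str, internal_nets) -> bool:
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in internal_nets)


def correlate(flagged: Dict[str, datetime], flows: Iterable[Flow],
              email_ips: Set[str], internal_nets) -> List[Tuple[str, str]]:
    """Return (reason, ip) alerts for previously logged attacker IPs."""
    alerts = []
    for f in flows:
        # 1) An internal host contacts a flagged IP after the attack was logged.
        if (f.dest_ip in flagged and is_internal(f.src_ip, internal_nets)
                and f.time >= flagged[f.dest_ip]):
            alerts.append(("internal host contacted flagged IP", f.dest_ip))
        # 2) The flagged IP successfully connects to an exposed service.
        elif f.src_ip in flagged and f.established:
            alerts.append(("flagged IP connected to exposed service", f.src_ip))
    # 3) The flagged IP appears in email sender/recipient context.
    for ip in sorted(set(flagged) & email_ips):
        alerts.append(("flagged IP seen in email logs", ip))
    return alerts


nets = [ipaddress.ip_network("10.0.0.0/8")]
flagged = {"203.0.113.7": datetime(2024, 1, 1, 9, 0),
           "198.51.100.9": datetime(2024, 1, 1, 9, 5)}
flows = [
    Flow(datetime(2024, 1, 1, 10, 0), "10.1.2.3", "203.0.113.7", True),
    Flow(datetime(2024, 1, 1, 9, 6), "198.51.100.9", "10.1.2.4", False),
    Flow(datetime(2024, 1, 1, 9, 7), "198.51.100.9", "10.1.2.4", True),
]
for reason, ip in correlate(flagged, flows, {"203.0.113.7"}, nets):
    print(reason, ip)
```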

For details on TCP flags in AWS VPC Flow Logs (useful to tell whether a connection actually succeeded):

VPC Flow Logs: https://docs.aws.amazon.com/vpc/latest/userguide/flow-logs.html#flow-logs-fields

TCP segment structure: https://en.wikipedia.org/wiki/Transmission_Control_Protocol#TCP_segment_structure
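A small Python sketch for reading the flow log `tcp-flags` field, assuming your flow log format includes that field. Per the VPC Flow Logs documentation linked above, the values are a bitmask (FIN 1, SYN 2, RST 4, SYN-ACK 18) and can be OR-ed over the aggregation interval, so 19 means SYN-ACK and FIN in the same record. The helper names are hypothetical. A SYN-ACK on a record from your exposed service to the flagged IP shows the service answered the handshake; it does not by itself prove data was exchanged.

```python
from typing import List

# TCP header bit values; SYN-ACK is reported as 18 (SYN 2 + ACK 16).
FIN, SYN, RST, ACK = 1, 2, 4, 16
SYN_ACK = SYN | ACK


def decode_tcp_flags(value: int) -> List[str]:
    """Names of the flag bits present in a (possibly OR-ed) tcp-flags value."""
    bits = (("FIN", FIN), ("SYN", SYN), ("RST", RST), ("ACK", ACK))
    return [name for name, bit in bits if value & bit]


def service_answered(tcp_flags: int) -> bool:
    """True if the record carries SYN-ACK, i.e. the server side answered a handshake.

    Apply it to records whose source is your exposed service and whose
    destination is the flagged IP.
    """
    return (tcp_flags & SYN_ACK) == SYN_ACK


for value in (2, 18, 19, 4):
    print(value, decode_tcp_flags(value), service_answered(value))
```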

Use the same correlated strategy for other high-confidence attack patterns beyond User-Agent detection.

Conclusion

User-Agent string detection can yield high-confidence threat signals for SOC teams when used judiciously. It is particularly effective when combined with:

A curated list of suspicious User-Agents (for detection rules).

Statistical and behavioral anomaly hunts (for threat hunting).

Correlation with proxy/CMDB/host telemetry and firewall/WAF logs (to reduce false positives and increase confidence).

Happy hunting!

References and useful links:

Suspicious UA CSV: https://github.com/mthcht/awesome-lists/blob/main/Lists/suspicious_http_user_agents_list.csv

MDN: https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/User-Agent

Raccoon sample analysis: https://tria.ge/230404-kmka5adg89/behavioral2

Joe Sandbox Raccoon analysis: https://www.joesandbox.com/analysis/1342102/0/html

Splunk rare docs: https://docs.splunk.com/Documentation/SplunkCloud/latest/SearchReference/Rare

AWS VPC Flow Logs: https://docs.aws.amazon.com/vpc/latest/userguide/flow-logs.html#flow-logs-fields

TCP segment structure: https://en.wikipedia.org/wiki/Transmission_Control_Protocol#TCP_segment_structure
